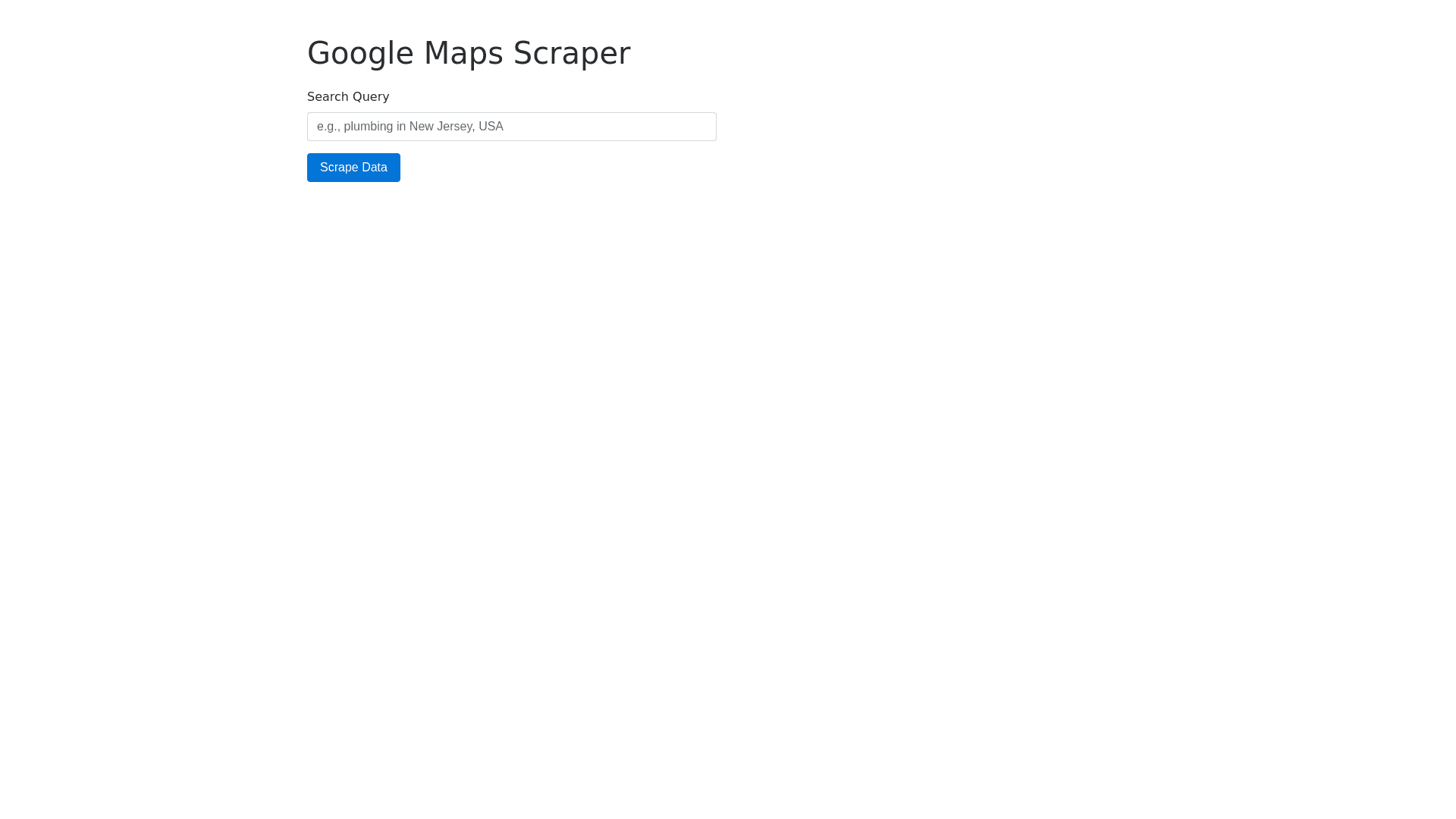

Maps Scraper Interface - Copy this HTML, Bootstrap Component to your project

Use this code in a website to make a Google Maps scraper:

```python
from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC
from webdriver_manager.chrome import ChromeDriverManager
import time
import json
import re
import csv
import pandas as pd

options = webdriver.ChromeOptions()
# options.add_argument("--start-maximized")
options.add_argument("--headless=new")
driver = webdriver.Chrome(options=options)
# The scroll promise below can outlive WebDriver's default 30 s script
# timeout, so raise it (300 s is an arbitrary choice).
driver.set_script_timeout(300)

try:
    driver.get('https://www.google.com/maps/search/plumbing+in+New+Jersey,+USA/@40.4576266,-75.2915609,9z?entry=ttu&g_ep=EgoyMDI0MDgyOC4wIKXMDSoASAFQAw%3D%3D')

    # Dismiss the consent form if one is shown.
    try:
        WebDriverWait(driver, 5).until(
            EC.element_to_be_clickable((By.CSS_SELECTOR, "form:nth-child(2)"))
        ).click()
    except Exception:
        pass

    # Scroll the results feed until its height stops growing.
    scrollable_div = driver.find_element(By.CSS_SELECTOR, 'div[role="feed"]')
    driver.execute_script("""
        var scrollableDiv = arguments[0];
        function scrollWithinElement(scrollableDiv) {
            return new Promise((resolve, reject) => {
                var totalHeight = 0;
                var distance = 1000;
                var scrollDelay = 3000;
                var timer = setInterval(() => {
                    var scrollHeightBefore = scrollableDiv.scrollHeight;
                    scrollableDiv.scrollBy(0, distance);
                    totalHeight += distance;
                    if (totalHeight >= scrollHeightBefore) {
                        totalHeight = 0;
                        setTimeout(() => {
                            var scrollHeightAfter = scrollableDiv.scrollHeight;
                            if (scrollHeightAfter > scrollHeightBefore) {
                                return;
                            } else {
                                clearInterval(timer);
                                resolve();
                            }
                        }, scrollDelay);
                    }
                }, 200);
            });
        }
        return scrollWithinElement(scrollableDiv);
    """, scrollable_div)

    # Collect the link to each listing in the feed.
    links = []
    items = driver.find_elements(By.CSS_SELECTOR, 'div[role="feed"] > div > div[jsaction]>a')
    for i in items:
        links.append(i.get_attribute('href'))

    # Visit each listing; any field that cannot be found is skipped.
    results = []
    for link in links:
        data = {}
        driver.get(link)
        time.sleep(3)
        try:
            data['title'] = driver.find_element(By.CSS_SELECTOR, 'div[role="main"]>div>div>div>div>h1').text
        except Exception:
            pass
        try:
            data['Website'] = driver.find_element(By.CSS_SELECTOR, 'a[data-tooltip="Open website"]:nth-child(1)').get_attribute('href')
        except Exception:
            pass
        try:
            data['Address'] = driver.find_element(By.CSS_SELECTOR, 'button[data-item-id="address"]>div>div>div.fontBodyMedium').text
        except Exception:
            pass
        try:
            data['phone_no'] = driver.find_element(By.CSS_SELECTOR, 'button[data-tooltip="Copy phone number"]>div>div>div.fontBodyMedium').text
        except Exception:
            pass
        try:
            data['plus_code'] = driver.find_element(By.CSS_SELECTOR, 'button[data-tooltip="Copy plus code"]>div>div>div.fontBodyMedium').text
        except Exception:
            pass
        try:
            data['Rating'] = driver.find_element(By.CSS_SELECTOR, 'div[role="main"]>div>div>div>div>div>div>div>span>[role="img"]').get_attribute('aria-label')
        except Exception:
            pass
        try:
            data['Reviews_Count'] = driver.find_element(By.CSS_SELECTOR, 'div[role="main"]>div>div>div>div>div>div>div>:nth-child(2)>span>span').get_attribute('aria-label')
        except Exception:
            pass
        try:
            url = driver.current_url
            data['GoogleMap_Url'] = url
            # The listing URL carries the coordinates as "@<lat>,<lng>".
            regex = r"@([0-9.-]+),([0-9.-]+)"
            match = re.search(regex, driver.current_url)
            if match:
                latitude, longitude = match.groups()
                data['latitude'] = latitude
                data['longitude'] = longitude
            print(data)
        except Exception:
            pass
        # Keep only listings whose title was found.
        if data.get('title'):
            results.append(data)

    # with open('Leads.csv', 'w', newline='') as csvfile:
    #     writer = csv.writer(csvfile)
    #     writer.writerow(['title', 'Website', 'Address','Phone_No','Plus_Code','rating','recount','GLink','lat','Long'])
    #     writer.writerows([results[0], results[1], results[2],results[3],results[4],results[5],results[6],results[7],results[8],results[9]])
    print(results)
    df = pd.DataFrame(data=results)
    df.to_csv("bobo.csv")
finally:
    time.sleep(5)
    driver.quit()
```
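The coordinate extraction and the CSV export are the two parts of the script that can be checked without opening a browser. The following is a minimal sketch, not the script's own code: the helper names `extract_coordinates` and `write_leads` and the `FIELDS` column order are hypothetical, and it assumes listing URLs carry coordinates in the `@<lat>,<lng>` form the script's regex targets. It uses the standard library's `csv.DictWriter` instead of pandas so that listings with missing fields still produce well-formed rows.

```python
import csv
import io
import re

# Assumed URL shape: ".../@<lat>,<lng>,<zoom>z...", as in the search URL
# the script opens. Other URL layouts are not handled.
COORD_RE = re.compile(r"@(-?\d+(?:\.\d+)?),(-?\d+(?:\.\d+)?)")

# Hypothetical column order; the keys match those the scraper stores in `data`.
FIELDS = [
    "title", "Website", "Address", "phone_no", "plus_code",
    "Rating", "Reviews_Count", "GoogleMap_Url", "latitude", "longitude",
]


def extract_coordinates(url):
    """Return (latitude, longitude) as floats, or None if the URL has no @lat,lng part."""
    match = COORD_RE.search(url)
    if match is None:
        return None
    return float(match.group(1)), float(match.group(2))


def write_leads(rows, fileobj):
    """Write scraped dicts as CSV; keys a row lacks become empty cells."""
    writer = csv.DictWriter(
        fileobj, fieldnames=FIELDS, restval="", extrasaction="ignore"
    )
    writer.writeheader()
    writer.writerows(rows)


if __name__ == "__main__":
    # Made-up listing, used only to show the call pattern.
    sample_url = "https://www.google.com/maps/place/Example/@40.4576266,-75.2915609,9z"
    print(extract_coordinates(sample_url))

    buffer = io.StringIO()
    write_leads([{"title": "Example Plumbing", "GoogleMap_Url": sample_url}], buffer)
    print(buffer.getvalue())
```

In the scraper, `write_leads(results, open_file)` could stand in for the commented-out `csv.writer` lines, with the file opened using `newline=''` as those lines already do.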