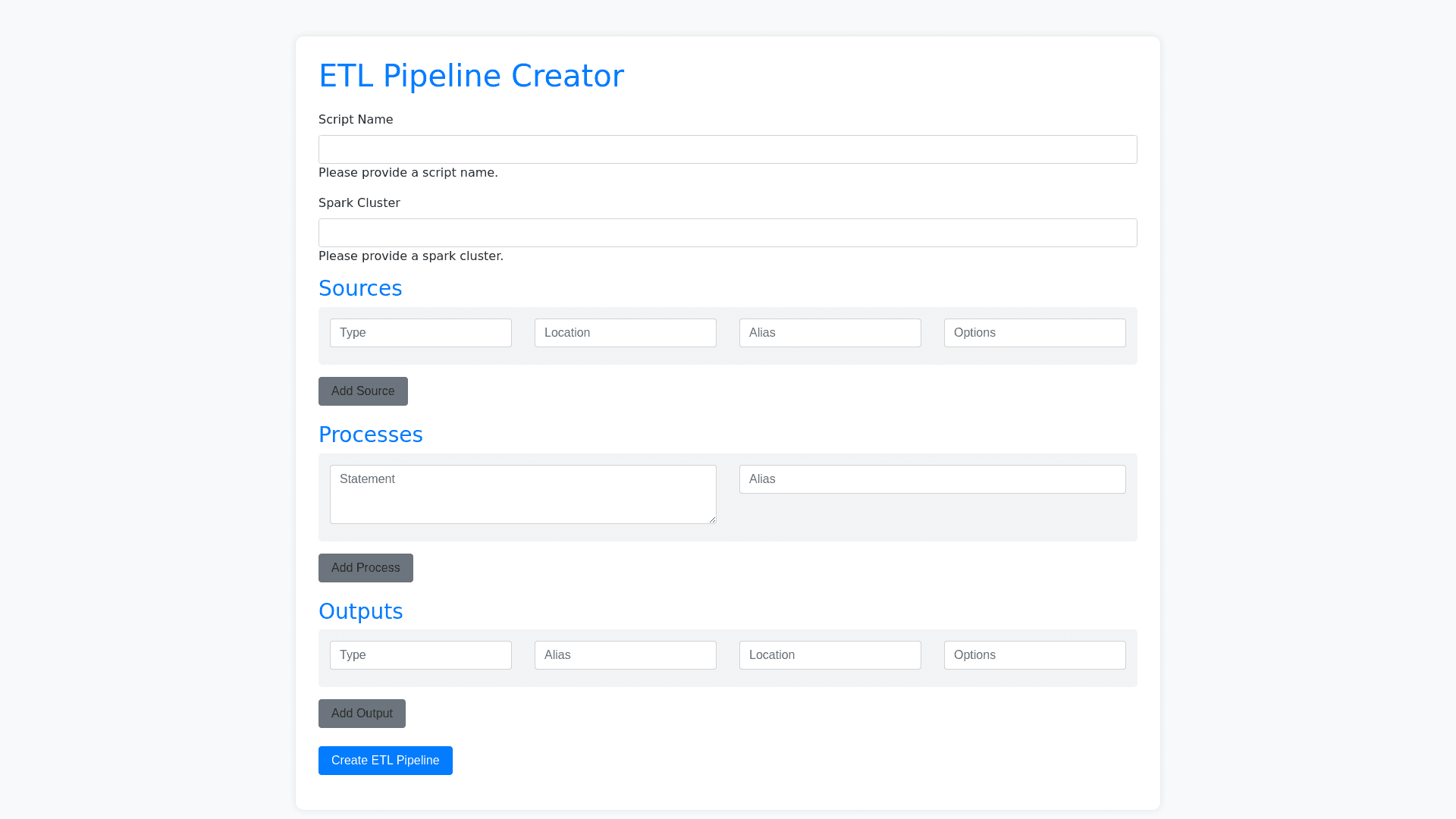

ETL Pipeline Creator - Copy this HTML, Bootstrap Component to your project

Help me generate a UI for entering the JSON values below to create an ETL pipeline in Spark:

{ "scriptName": "sink_jdbc_to_kafka", "sparkCluster": "spark 42114", "sources": [ { "type": "ORACLE", "location": "ora erp 49", "alias": "dfInput", "options": { "dbtable": "ERP.PM_SELLQUANTITY", "numPartitions": "60", "lowerBound": "0", "upperBound": "30000", "fetchsize": "10000", "partitionColumn": "STOREID" } } ], "processes": [ { "statement": "SELECT UUID AS key,TO_JSON(NAMED_STRUCT('UUID',UUID,'STOREID',STOREID,'PRODUCTID',PRODUCTID,'ISFLAG',ISFLAG,'INPUTTIME',INPUTTIME,'SELLQUANTITY',SELLQUANTITY,'_TIMESTAMP',_TIMESTAMP,'DATE_KEY',DATE_KEY,'TB30',TB30)) AS value FROM( SELECT CONCAT(STOREID,'_',TRIM(PRODUCTID)) AS UUID,CAST(STOREID AS INT) AS STOREID,TRIM(PRODUCTID) AS PRODUCTID,CAST(ISFLAG AS INT) AS ISFLAG,CAST(UNIX_TIMESTAMP(INPUTTIME,'yyyy MM ddHH:mm:ss') AS BIGINT) AS INPUTTIME,SELLQUANTITY,UNIX_TIMESTAMP()*1000 AS _TIMESTAMP,CAST(DATE_KEY AS BIGINT) AS DATE_KEY,TB30 FROM dfInput) AS subquery ", "alias": "dfOutput" } ], "outputs": [ { "type": "KAFKA", "alias": "dfOutput", "location": "KAFKA 125", "options": { "topic": "hoodie source sellquantity" }, "saveMode": "append" } ] }
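A form that collects this JSON will probably want to check its shape before submitting it. The sketch below is one possible way to do that in Python. The required-field lists are an assumption inferred from the single example in the prompt, not a documented schema, and `validate_pipeline` is a hypothetical helper name. The `statement` in `EXAMPLE` is a shortened stand-in for the full query.

```python
import json

# Required keys per section. ASSUMPTION: inferred from the one example payload
# in the prompt; the real pipeline service may require more or fewer fields.
REQUIRED = {
    "sources": ("type", "location", "alias", "options"),
    "processes": ("statement", "alias"),
    "outputs": ("type", "location", "alias", "options", "saveMode"),
}


def validate_pipeline(config):
    """Return a list of problems; an empty list means the shape looks OK."""
    problems = []

    # Top-level scalar fields must be non-empty strings.
    for key in ("scriptName", "sparkCluster"):
        value = config.get(key)
        if not isinstance(value, str) or not value.strip():
            problems.append(f"{key}: must be a non-empty string")

    # Aliases defined by sources and processes; outputs must refer to one of them.
    known_aliases = set()
    for section, fields in REQUIRED.items():
        items = config.get(section)
        if not isinstance(items, list) or not items:
            problems.append(f"{section}: must be a non-empty list")
            continue
        for i, item in enumerate(items):
            if not isinstance(item, dict):
                problems.append(f"{section}[{i}]: must be an object")
                continue
            for field in fields:
                if field not in item:
                    problems.append(f"{section}[{i}].{field}: missing")
            alias = item.get("alias")
            if section == "outputs":
                if alias is not None and alias not in known_aliases:
                    problems.append(
                        f"outputs[{i}].alias: '{alias}' is not produced "
                        "by any source or process"
                    )
            elif isinstance(alias, str):
                known_aliases.add(alias)
    return problems


# Trimmed copy of the payload from the prompt (statement shortened for illustration).
EXAMPLE = json.loads("""
{
  "scriptName": "sink_jdbc_to_kafka",
  "sparkCluster": "spark 42114",
  "sources": [
    {"type": "ORACLE", "location": "ora erp 49", "alias": "dfInput",
     "options": {"dbtable": "ERP.PM_SELLQUANTITY", "partitionColumn": "STOREID"}}
  ],
  "processes": [
    {"statement": "SELECT STOREID AS key, SELLQUANTITY AS value FROM dfInput",
     "alias": "dfOutput"}
  ],
  "outputs": [
    {"type": "KAFKA", "alias": "dfOutput", "location": "KAFKA 125",
     "options": {"topic": "hoodie source sellquantity"}, "saveMode": "append"}
  ]
}
""")

if __name__ == "__main__":
    print(validate_pipeline(EXAMPLE))
```

The alias check reflects how the example chains its steps: the source registers `dfInput`, the process reads it and produces `dfOutput`, and the Kafka output writes `dfOutput`. A UI could run the same check to populate the output alias field from a dropdown instead of free text.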